tags:

- ArchModern CPU Evolution (ENG)

Notes on Unlocking Modern CPU Power, very interesting talk, highly recommended!

Ten Years of CPU Evolution

We got this two CPUs die shot a decade of server processor advancements. We serve here the Intel Xeon E5-2600 v2 from 2013 and Intel Xeon Sapphire Rapids from 2023.

2013 Server CPU: Intel Xeon E5-2600 v2 (Ivy Bridge-EP)

Back in the day, server CPUs were always monolithic, meaning all cores were build on a single die. In 2013, Intel released the Ivy Bridge-EP Xeon E5-2600 v2 processors for high performance servers. This processor features:

- Up to 12 cores (10 cores version here)

- 30MB of L3 cache (positioned in the center of the cores)

- Manufactured on a 22nm process

This image below shows a 10-core variant of this CPU, all cores are integrated on a single die:

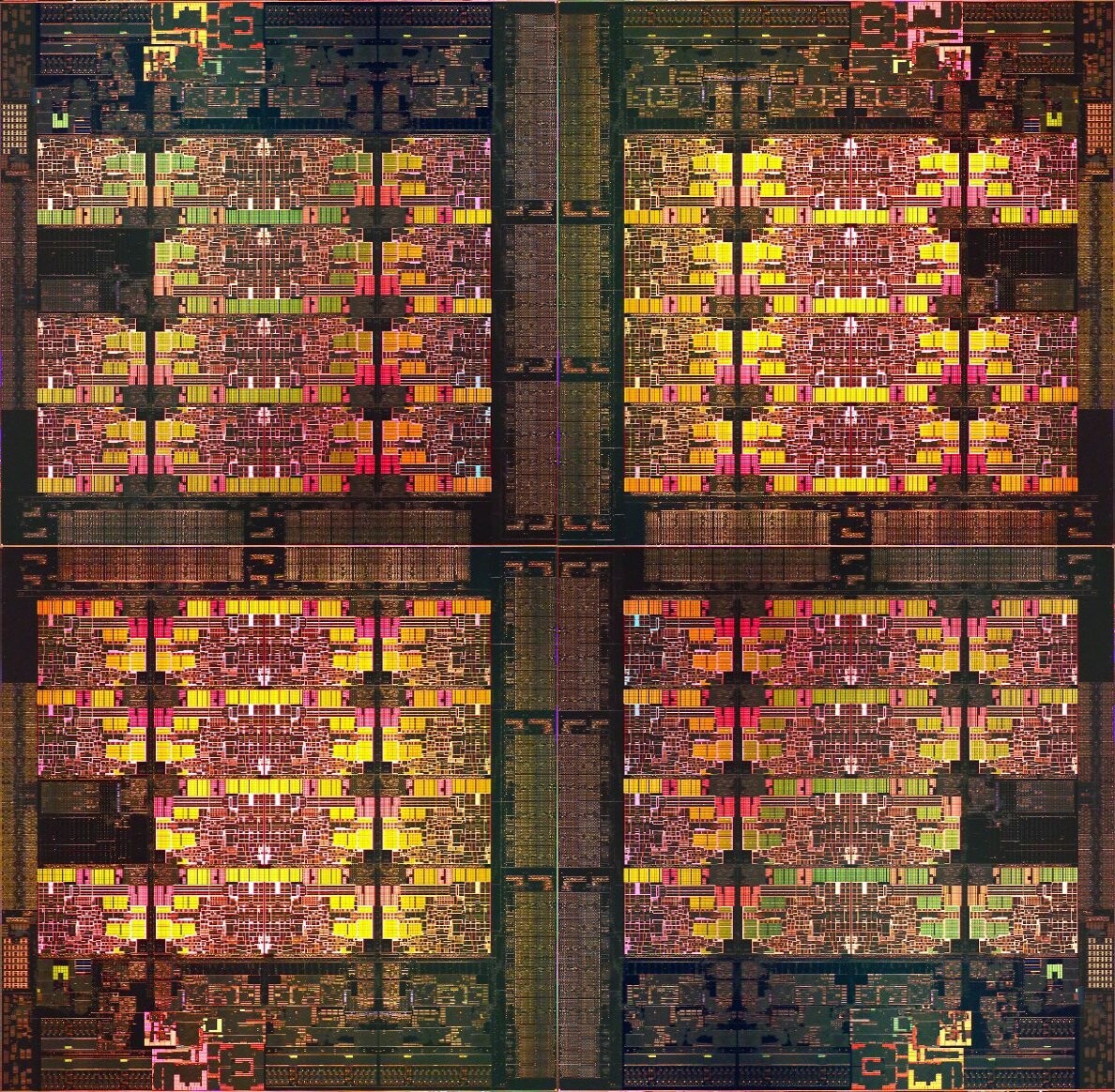

2023 Server CPU: Intel Xeon Sapphire Rapids

In 2023, the process node technology has improved a lot, but the fundamental microarchitecture on a single die hasn't changed significantly. However, CPU architecture itself has transformed--moving away from monolithic designs toward chiplet-based structures.

The uArch is changing. We now have four-die (tile) structure, where each die contains 15 cores, totaling 56 cores across the entire processor. The L3 cache is on top of the whole die.

Comparisons

With the advancement in manufacturing processes, transistor density has increased significantly, leading a higher performance while maintaining similar die size(2013 - one die; 2023 multiple die in a package).

We now have much larger caches. In 2024, the AMD server (EPYC IV) processor features 1GB of shared cache and 96MB of L2 cache (1MB per core), while 10 years ago, we only had 30MB of L3 cache. (Make-up for slow memory)

Beyond Computation: The Expanding Features of Modern CPUs

In this section, we'll talk about how your to write better code with a little bit of understanding of modern CPU. First things first, let's dive into the superscalar stuff.

Superscalar Execution

All modern CPUs are superscalar, meaning multiple independent instructions can be executed within a single clock cycle. For example:

for(size_t i =; i < N; ++i) {

x = a[i] + b[i];

y = a[i] - b[i];

a[i] += 10;

b[i] <<= 3;

}

This loop contains multiple operations that modern CPUs can execute in parallel within the backend of a CPU. A typical CPU features multiple types of ALUs, allowing diverse computations to occur simultaneously. Provided there are no dependencies between these instructions, the CPU can dispatch different instructions to various ALUs (execution units), optimizing execution efficiency.

If a task is memory-bound, meaning its performance is primarily limited by memory access speed rather than CPU computation, then superscalar execution has minimal impact.

Luckily, CPU caches have our back!

Caches

Typically, modern CPU have three layers of cache within the processor: L1, L2 and L3. These caches help reduce memory latency and increase data access speed. They provide a prefetching mechanisms, allowing the CPU to predict and load data ahead of time, especially when memory access is sequential. If memory access is random, the prefetch prediction could be defeated. (Vectors are the best most of the times)

Pipelining and Branching

Inside a CPU, we have four stages to execute an instruction——fetch, decode, execute, and write back. Each of these stages have several sub-stages that enable CPU pipelining, allowing multiple instructions to be processed in different execution sub-stages simultaneously.

But when the CPU encounters a branch instruction, it must determine which part of the program to jump to, or the pipeline will stall. Modern CPUs have branch predictors and speculative execution to prevent pipeline stalls, and normally, you get fairly accurate predictions, making the pipeline run smoothly.

But if the branch prediction is wrong, the CPU then must flush the pipeline, discarding incorrectly fetched instructions.This results in a performance penalty, also called a misbranching penalty. The longer the pipeline is, the greater the penalty will be.

To avoid this penalty, we need to write code that makes branch predictable. Or if you cannot ensure which branch would be taken, then you should make every possible code path is branchless. (Avoiding condition instruction, condition data is fine)

Branchless code often executes more instructions than branch-based code because it always computes both possible outcomes, regardless of whether they are needed. However, this isn't necessarily a drawback in modern CPUs (no misbranching penalty + superscalar execution).